[zotpress items=”{9687752:I3X6ZSIP}” style=”apa”]

Introduction

Cloud computing, defined as the delivery of computing services over the internet, offers innovative solutions by providing access to servers, storage, databases, networking, software, analytics, and intelligence. This technology enables faster innovation, flexible resource management, and significant economies of scale, transforming the way industries operate (Krauss et al., 2023). Its significance is rapidly expanding across various sectors, including scientific research, where it holds tremendous potential to revolutionize geophysical and seismological studies (Krauss et al., 2023).

What is Cloud Computing?

Cloud computing can be categorized into three main types of services:

Infrastructure as a Service (IaaS)

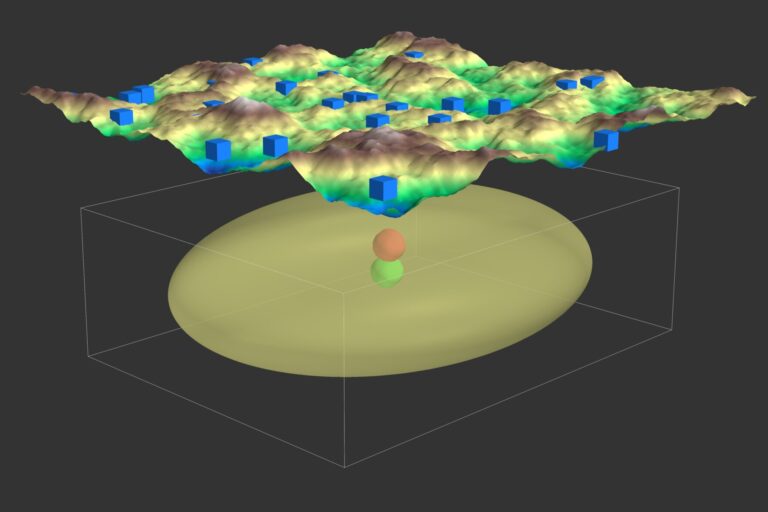

IaaS provides virtualized computing resources over the internet, allowing users to rent servers and storage space. This model is ideal for researchers who require large-scale computing power and storage without the need to maintain physical hardware (Eos.org, 2019). For example, a university research team conducting a study involving the processing of large volumes of seismic data collected from various monitoring stations around the world can use Amazon Web Services (AWS) Elastic Compute Cloud (EC2). By renting virtual servers through EC2, the team can access the necessary processing power and storage capacity to handle their computations. They can easily scale resources up or down depending on their data processing needs, making IaaS a flexible and cost-effective solution.

Platform as a Service (PaaS)

PaaS offers a platform that includes hardware and software tools delivered over the internet, primarily for app and software development. It simplifies the development process by providing a managed environment where researchers can build, test, and deploy applications quickly and efficiently (Eos.org, 2019). For example, a group of seismologists developing a new application to analyze seismic activity data in real time can utilize Google Cloud’s App Engine, a PaaS solution that offers a fully managed environment for developing and deploying applications. With App Engine, the team can focus on writing code and building features without managing the underlying infrastructure, such as servers, networking, or scaling, allowing them to put their application into use more quickly.

Software as a Service (SaaS)

SaaS delivers software applications over the internet on a subscription basis, enabling users to access and use software without managing the underlying infrastructure. This model is particularly beneficial for deploying specialized seismic software without the overhead of maintenance and updates (IEEE, 2024). For example, an organization dedicated to monitoring seismic activity globally can subscribe to a hosted application that provides tools for analyzing and visualizing seismic data over the internet, for instance one that reads event catalogs in QuakeML (an XML-based format for exchanging seismological data, not an application in itself). With such a service, the organization can access the software’s capabilities directly through a web browser without installing or managing the software on its own servers. This approach reduces the maintenance burden and ensures the organization is always using the latest version of the software with updated features and security patches.

Additionally, cloud deployment models include:

- Public Clouds: Public clouds are operated by third-party cloud service providers and deliver computing resources, such as servers and storage, over the internet. They offer flexibility and cost-effectiveness, though they may have limitations in terms of security and compliance. For example, a geophysical research institute can use Microsoft Azure, a public cloud service, to store and process large datasets from seismic monitoring stations. By leveraging Azure’s scalable resources, the institute can handle the varying computational demands of its research without investing in physical hardware. In return, the institute must carefully manage data security and compliance, particularly when dealing with sensitive information.

- Private Clouds: Private clouds are exclusive to a single organization and offer greater control, security, and customization. They are ideal for organizations with strict regulatory requirements or those that handle sensitive data. For example, a government agency focused on earthquake monitoring can operate its own private cloud to manage seismic data collection and analysis. Given the sensitive nature of the data, the agency may prefer to maintain full control over the infrastructure, ensuring that all data processing and storage meet strict security and regulatory requirements. The private cloud allows the agency to customize its environment to meet specific needs while maintaining a high level of data protection.

- Hybrid Clouds: Hybrid clouds combine public and private clouds, allowing data and applications to be shared between them. This model offers greater flexibility and optimization of existing infrastructure while maintaining security and compliance for critical workloads (IEEE, 2024). For example, a university’s seismology department can use a hybrid cloud approach for its research projects. The department runs sensitive data analysis on a private cloud within the university’s data center to ensure compliance with data privacy regulations. For less sensitive tasks, such as running large-scale simulations or holding temporary data, the department can utilize Amazon Web Services (AWS), a public cloud. This hybrid setup lets the department optimize resource use, balancing the need for security with the cost-efficiency and scalability of public cloud resources.

Benefits of Cloud Computing for Geophysical and Seismological Research

Scalability and Flexibility

Cloud platforms provide virtually unlimited computational resources that can be scaled according to research needs. In seismology, where data processing demands can vary significantly based on the size and complexity of datasets, cloud computing offers a flexible and responsive solution. For instance, during a seismic event, researchers can quickly scale up resources to analyze large amounts of real-time data, and then scale down once the analysis is complete (Eos.org, 2019).
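The scale-up and scale-down behaviour described above can be sketched as a simple sizing rule. This is an illustrative sketch only, not a cloud provider's autoscaling API: the function name `workers_needed`, the throughput figure, and the worker limits are all assumptions chosen for the example.

```python
import math


def workers_needed(backlog_items, items_per_worker_per_min, target_minutes,
                   min_workers=1, max_workers=64):
    """Return how many workers would clear the backlog within target_minutes.

    All parameters are hypothetical; a real deployment would take them from
    monitoring data and from the provider's quota for the account.
    """
    if items_per_worker_per_min <= 0 or target_minutes <= 0:
        raise ValueError("throughput and target time must be positive")
    capacity_per_worker = items_per_worker_per_min * target_minutes
    required = math.ceil(backlog_items / capacity_per_worker)
    # Clamp to the allowed range so the pool never drops to zero
    # and never exceeds the assumed quota.
    return max(min_workers, min(max_workers, required))


# Quiet period: a small backlog of waveform segments.
quiet = workers_needed(backlog_items=200, items_per_worker_per_min=50,
                       target_minutes=10)
# During a seismic event: the backlog grows sharply.
burst = workers_needed(backlog_items=12_000, items_per_worker_per_min=50,
                       target_minutes=10)
print(quiet, burst)
```

Re-evaluating the rule as the backlog shrinks gives the scale-down half of the cycle: once the event data is processed, the same function returns the minimum pool size again.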

Cost-Effectiveness

By reducing the need for expensive on-premises hardware, cloud computing allows research teams to pay only for the resources they use, thereby optimizing their budgets. This cost-effectiveness is particularly advantageous for smaller research teams or projects with limited funding, as it lowers the financial barrier to accessing high-performance computing resources (Krauss et al., 2023).
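The pay-per-use argument can be made concrete with a small calculation. The sketch below uses placeholder prices that are assumptions for illustration, not real provider rates, and it deliberately ignores power, staffing, and depreciation, which a real comparison would need to include.

```python
def on_demand_cost(hours_used, hourly_rate):
    """Cost of renting compute only for the hours actually used."""
    return hours_used * hourly_rate


def break_even_hours(hardware_cost, hourly_rate):
    """Hours of rented compute at which renting costs as much as buying."""
    if hourly_rate <= 0:
        raise ValueError("hourly rate must be positive")
    return hardware_cost / hourly_rate


# Hypothetical figures, chosen only to make the arithmetic easy to follow.
HOURLY_RATE = 2.0          # assumed price per instance-hour
HARDWARE_COST = 20_000.0   # assumed purchase price of a comparable server

campaign_cost = on_demand_cost(hours_used=300, hourly_rate=HOURLY_RATE)
threshold = break_even_hours(HARDWARE_COST, HOURLY_RATE)
print(f"300-hour campaign: {campaign_cost:.2f}")
print(f"break-even after {threshold:.0f} instance-hours")
```

Under these assumed numbers, a team that needs a few hundred instance-hours per project stays far below the break-even point, which is the situation in which renting is the cheaper option.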

Collaboration and Data Sharing

Cloud platforms facilitate global collaboration by providing centralized data storage and sharing capabilities. This is particularly beneficial for projects like global seismic networks, where data from many locations must be aggregated and analyzed in real time. Cloud computing enables seamless collaboration among researchers worldwide, allowing them to share insights and findings more efficiently (Eos.org, 2019).
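Aggregating data from several locations often comes down to merging per-station streams into one time-ordered stream. The following is a minimal sketch using Python's standard `heapq.merge`; the record layout `(timestamp, station, amplitude)` and the station codes are assumptions made for the example, not a format used by any particular network.

```python
import heapq


def merge_streams(*streams):
    """Merge per-station streams into one stream ordered by timestamp.

    Each input stream must already be sorted by timestamp (the first
    element of every record). The merge is lazy, so it also works on
    generators that yield records as they arrive.
    """
    return heapq.merge(*streams, key=lambda record: record[0])


# Hypothetical records: (timestamp in seconds, station code, amplitude).
station_a = [(0.0, "STA_A", 0.12), (2.0, "STA_A", 0.15)]
station_b = [(1.0, "STA_B", 0.30), (3.0, "STA_B", 0.28)]

merged = list(merge_streams(station_a, station_b))
for timestamp, station, amplitude in merged:
    print(timestamp, station, amplitude)
```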

Data Storage and Management

The vast amounts of data generated in geophysical research pose significant challenges in terms of storage and management. Cloud storage solutions offer a range of options, including tiered storage, where less frequently accessed data can be stored at a lower cost. This not only reduces storage expenses but also ensures that critical data remains accessible when needed (Zhu et al., 2023).
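Tiered storage can be thought of as a rule that maps how recently a dataset was accessed to a storage class. The sketch below is illustrative: the tier names and age thresholds are assumptions, and real providers define their own classes and lifecycle policies.

```python
from datetime import date

# Hypothetical tiers as (name, minimum days since last access),
# listed from coldest to hottest so the first match is the coldest fit.
TIERS = [("archive", 365), ("cool", 30), ("hot", 0)]


def choose_tier(last_access, today):
    """Pick a storage tier from the number of days since last access."""
    age_days = (today - last_access).days
    for name, min_age in TIERS:
        if age_days >= min_age:
            return name
    # A last-access date in the future matches no tier; treat it as hot.
    return "hot"


today = date(2024, 6, 1)
print(choose_tier(date(2024, 5, 25), today))  # accessed last week
print(choose_tier(date(2024, 3, 1), today))   # accessed three months ago
print(choose_tier(date(2022, 1, 1), today))   # accessed over a year ago
```

Running a rule like this periodically over a catalog of datasets is one way to keep recent event data quickly accessible while older recordings move to cheaper storage.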

Advanced Analytics and Machine Learning

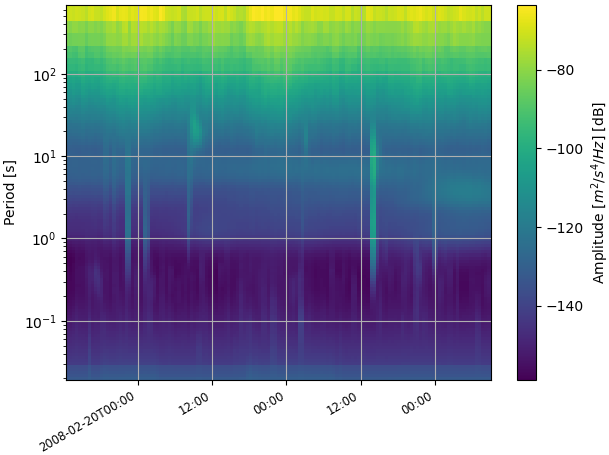

Cloud platforms provide access to powerful tools for data analysis, including machine learning and AI services. In seismology, these tools can be applied to real-time earthquake detection, pattern recognition in seismic data, and predictive modeling. For example, machine learning algorithms hosted on cloud platforms have been used to improve the accuracy of seismic event detection, enabling more timely and accurate alerts (Zhu et al., 2023).

Case Studies and Examples

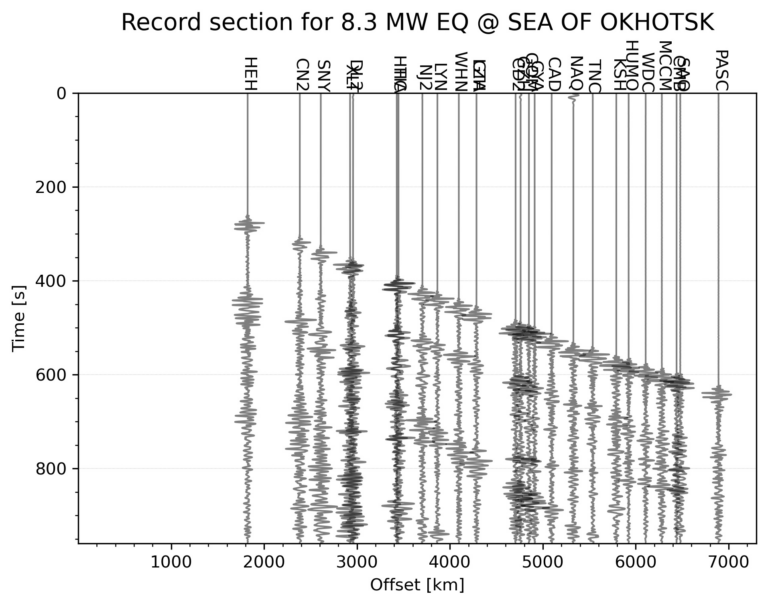

- Real-Time Earthquake Monitoring:

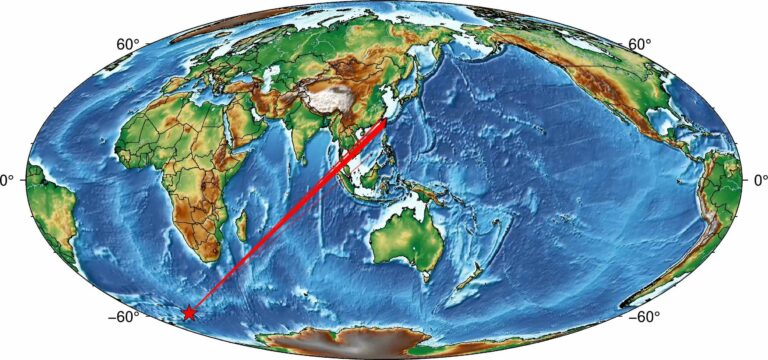

Cloud computing has been instrumental in developing systems that process and analyze data from numerous sensors simultaneously, providing faster and more accurate alerts. For instance, the use of cloud-based platforms has enabled real-time processing of seismic data, reducing the time between data acquisition and alert generation, which is crucial for early warning systems (Eos.org, 2019).

- Global Seismic Data Repositories:

Cloud-based repositories like the one operated by the Incorporated Research Institutions for Seismology (IRIS) play a crucial role in storing and providing access to seismic data on a global scale. These repositories allow researchers to access and analyze data from diverse seismic events, facilitating cross-comparison and collaborative studies that enhance our understanding of seismic phenomena (Zhu et al., 2023).

- Machine Learning in Seismology:

The application of cloud-based machine learning algorithms has significantly improved the accuracy of seismic event detection from large datasets. These algorithms can analyze patterns in seismic data that might be missed by traditional methods, offering a more robust and precise approach to seismic monitoring (Krauss et al., 2023).

Challenges and Considerations

- Data Security and Privacy:

Ensuring data security and privacy is paramount when using cloud services, especially in fields handling sensitive or proprietary data. Researchers must carefully evaluate the security measures provided by cloud service providers and consider additional layers of encryption or access controls to protect their data (Krauss et al., 2023).

- Cost Management:

While cloud computing offers cost savings, it is essential to monitor and manage costs proactively to avoid unexpected expenses. Researchers should utilize budgeting tools provided by cloud platforms to track usage and costs in real time, ensuring that their projects remain financially viable (Krauss et al., 2023).

- Dependency on Internet Connectivity:

Reliable internet connections are necessary for accessing cloud services, which can be challenging in remote research locations. Researchers must plan for contingencies, such as local data storage and processing capabilities, to ensure continuity of operations in case of connectivity issues (Krauss et al., 2023).
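The connectivity contingency mentioned above is often handled with a store-and-forward pattern: records are kept locally and uploaded once the link returns. The sketch below is a minimal in-memory illustration under stated assumptions. The class names `StoreAndForwardBuffer` and `FakeLink` are hypothetical, the uploader is a stand-in for a real cloud client, and a field deployment would persist the queue to disk rather than hold it in memory.

```python
from collections import deque


class StoreAndForwardBuffer:
    """Queue records locally and upload them in order when the link allows."""

    def __init__(self, upload):
        # upload: callable that sends one record and raises
        # ConnectionError when the link is down (an assumed convention).
        self._upload = upload
        self._pending = deque()

    def record(self, item):
        self._pending.append(item)

    def flush(self):
        """Upload pending records in order; stop at the first failure.

        Returns the number of records sent. Unsent records stay queued.
        """
        sent = 0
        while self._pending:
            try:
                self._upload(self._pending[0])
            except ConnectionError:
                break
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self):
        return len(self._pending)


class FakeLink:
    """Stand-in for a cloud upload client, used only for this example."""

    def __init__(self):
        self.online = False
        self.received = []

    def send(self, item):
        if not self.online:
            raise ConnectionError("link down")
        self.received.append(item)


link = FakeLink()
buffer = StoreAndForwardBuffer(link.send)
buffer.record("sample-1")
buffer.record("sample-2")
print(buffer.flush(), len(buffer))  # link is down, nothing is sent
link.online = True
print(buffer.flush(), len(buffer))  # link is back, the queue drains
```

A record is removed from the queue only after its upload succeeds, so an outage in the middle of a flush does not lose data.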

Conclusion

Cloud computing offers significant benefits to the geophysical and seismological community, including scalability, enhanced collaboration, and access to advanced analytical tools. Despite the challenges, the advantages of cloud computing make it an invaluable resource for researchers, encouraging the exploration of these technologies to enhance research capabilities and drive innovation in the field (Krauss et al., 2023).

If you use this information then please cite this work as:

[zotpress items=”{9687752:I3X6ZSIP}” style=”apa”]

References

[zotpress items=”{9687752:VRI7ZA67},{9687752:7Q3HRPSX},{9687752:SSMCZG4P},{9687752:RMI89K9C}” style=”apa”]
